The Rise and Fall of Lian Li Aluminum PC Cases - Nifty Thrifties

Here in Userlandia: Lian Li and the Case of the Aluminum Computer Case.

When you're hunting for old junk, it's best to keep your expectations low, so that you can be pleasantly surprised. As the Onion warned us way back in 1997, people love retro just a little too much, which can make it hard to find bargains. The truly rare and expensive stuff gets filtered out and shipped to warehouses for listing on online auction sites. The stuff that's good but not mind-blowing gets placed in glass cases at the front of the store, tagged with, let's say, optimistic prices sourced from Buy It Now listings. I might be willing to pay $25 for that SCSI slide scanner… if it wasn’t missing its power supply. Nevertheless, you sometimes find gold among the dross. Gold… or other metals.

I visited the Goodwill in Hudson, New Hampshire one frigid February afternoon expecting to find nothing of importance. This store is a bust more often than not, but it’s on my drive from Salem to Nashua, so I might as well stop to investigate. To my amazement, there in the new arrivals section was a new-in-box Lian Li aluminum PC case. Lian Li made some of the highest rated cases of the early aughts, so this was a nice find indeed. The box top was already cut open for easy inspection, and the case looked brand new. All the accessories were included and it was still wrapped in a protective plastic bag. Even the original shipping label was intact. And it was tagged at thirty dollars? That felt awful low. If I’d bought this new in 2003, it would have set me back $180—that’d be $280 today, thanks to our friend inflation. Even now, it'd still cost $100 on a used gear site, and that's not counting $50 for shipping. I wasn’t expecting to spend $30 on a computer case that day, but a deal like that, for a quality PC component like this, doesn't show up very often.

Doesn’t look fancy on the outside…

Maybe they were fooled by the box. With its bold fonts, solid colors, and starburst badge shouting “Pentium 4 Compatible,” the packaging looked like something you'd make in your first-year graphic design class. “C+, solid effort.” Someone at Lian Li decided to cut costs that year, because although they shelled out for color printing… they only had one box design. And they used it for far more models than they should have.

But mint on the inside.

I'm not criticizing their translation—“details are various from different models” might not be perfect English, but it's perfectly clear. You and I both know exactly what they meant to say—which is the problem. Each box had an extra label on the side, with the model number and specs. My Goodwill find was a PC-6077—which you'd never know just from looking at the box, which showed a PC-60. While this strategy probably saved Lian Li some production costs, it probably also caused countless headaches in the stock room at Micro Center. Regardless, I can’t judge a box by its cover. Can this chassis hold up to twenty years of hindsight? Let’s start with its exterior design.

Exterior Design

The PC-6077 in all its brushed metal glory.

Lian Li’s trademark silver fuselage still looks great twenty years later. A brushed aluminum finish stands out amongst the ranks of mostly beige, white, and sometimes black painted boxes of the early 21st century. But as unique as it was in the computer market, this style isn’t exactly original. Plop a silver Lian Li case next to my grandpa’s Onkyo stereo system from the 1970s and the resemblance is uncanny. Brushed aluminum was being used in A/V equipment and appliances for years before PC case makers decided it was cool. If the retro hi-fi style wasn’t to your taste, Lian Li also offered their cases in a subtle anodized black finish. Still, if you want people to know you have a fancy aluminum case, that brushed metal look shouted it from the rooftops.

The aluminum case craze started in the year 2000 with the Cooler Master ATC-200 and Lian Li’s series of cases. Cooler Master claims they made the “first aluminum PC case” with the ATC-200, but corroborating that claim is difficult. Lian Li had started their aluminum rack mount computer case business back in the eighties. But rack frames, rack mount server cases, and rolling server cabinets weren’t on the front page of the Tiger Direct catalog in the Web 1.0 days. The earliest documentation and references I could find for both manufacturers’ consumer aluminum cases are dated sometime in late 1999. My hunch is that Cooler Master’s case debuted in the USA first, while Lian Li was first shipping in Asian markets. In Ars Technica’s Case and Cooling forum, there are posts raving about the ATC-200 dated a few months before the first Lian Li thread. Without more definitive proof (and a manufacturer saying “we did it first” doesn’t count), I’ll give this one to Cooler Master.

Enough history—let’s get back to the case design. And there’s one word I’d use to describe that design: classy. There’s no colorful look-at-me LED or fluorescent lighting. There’s no overwrought curves and flourishes. Touching it feels classy, like the aluminum dashboard trim in a sports car. Even the front bezel is made out of aluminum. Most case bezels are plastic, and factories back then had trouble matching the color between painted metal and plastic. Brushed aluminum dodged that problem entirely. Still, Lian Li couldn’t avoid using some plastic, with satin black strips adorning the top and bottom of the bezel. Shame they weren’t carbon fiber like some other Lian Li models. Still, they complement the brushed metal finish and fit the classy aesthetic. I understand why these are plastic—they’re the part of the bezel that actually clips to the frame. It’s easier and more reliable to make these bits out of plastic, so it’s just the right material for the job.

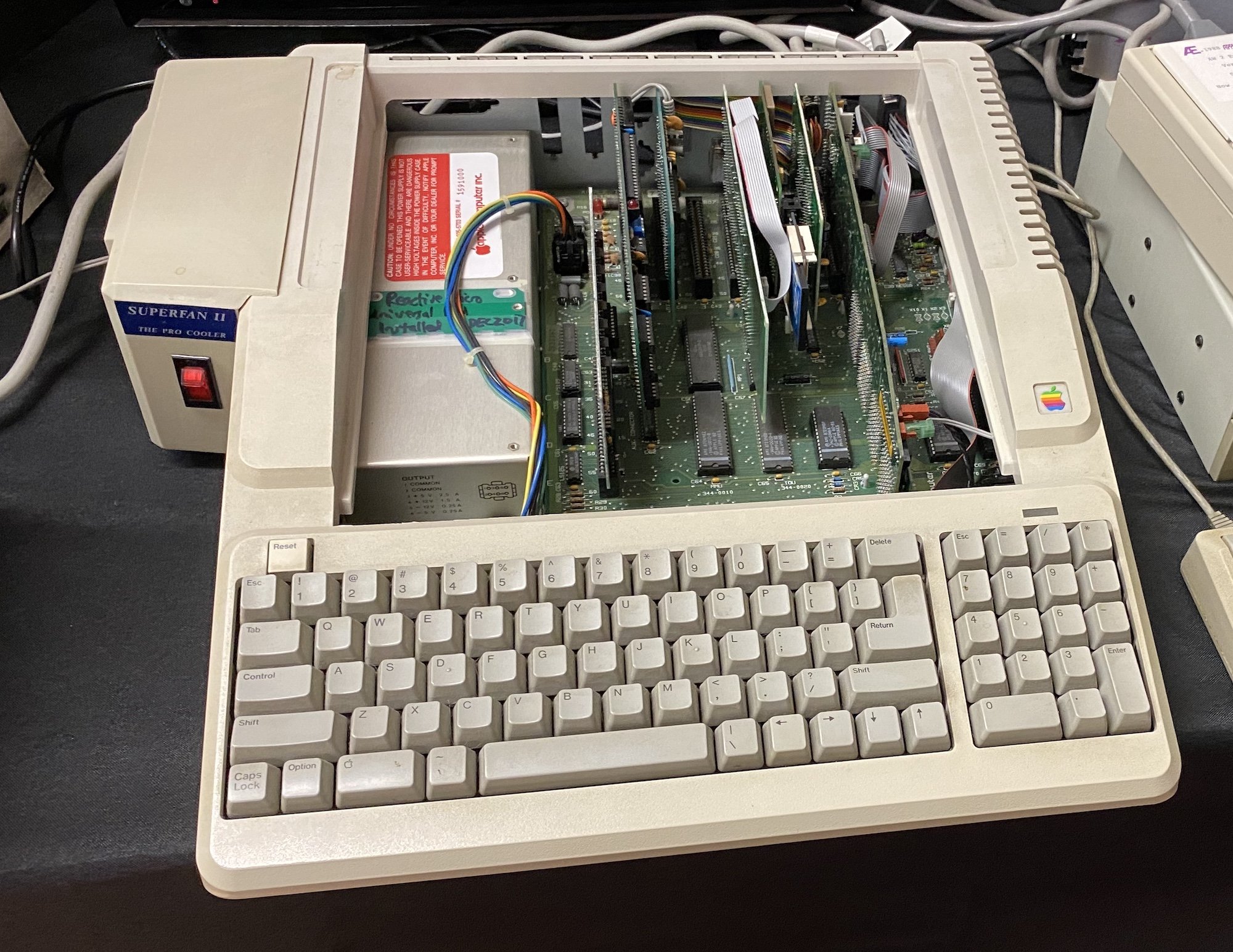

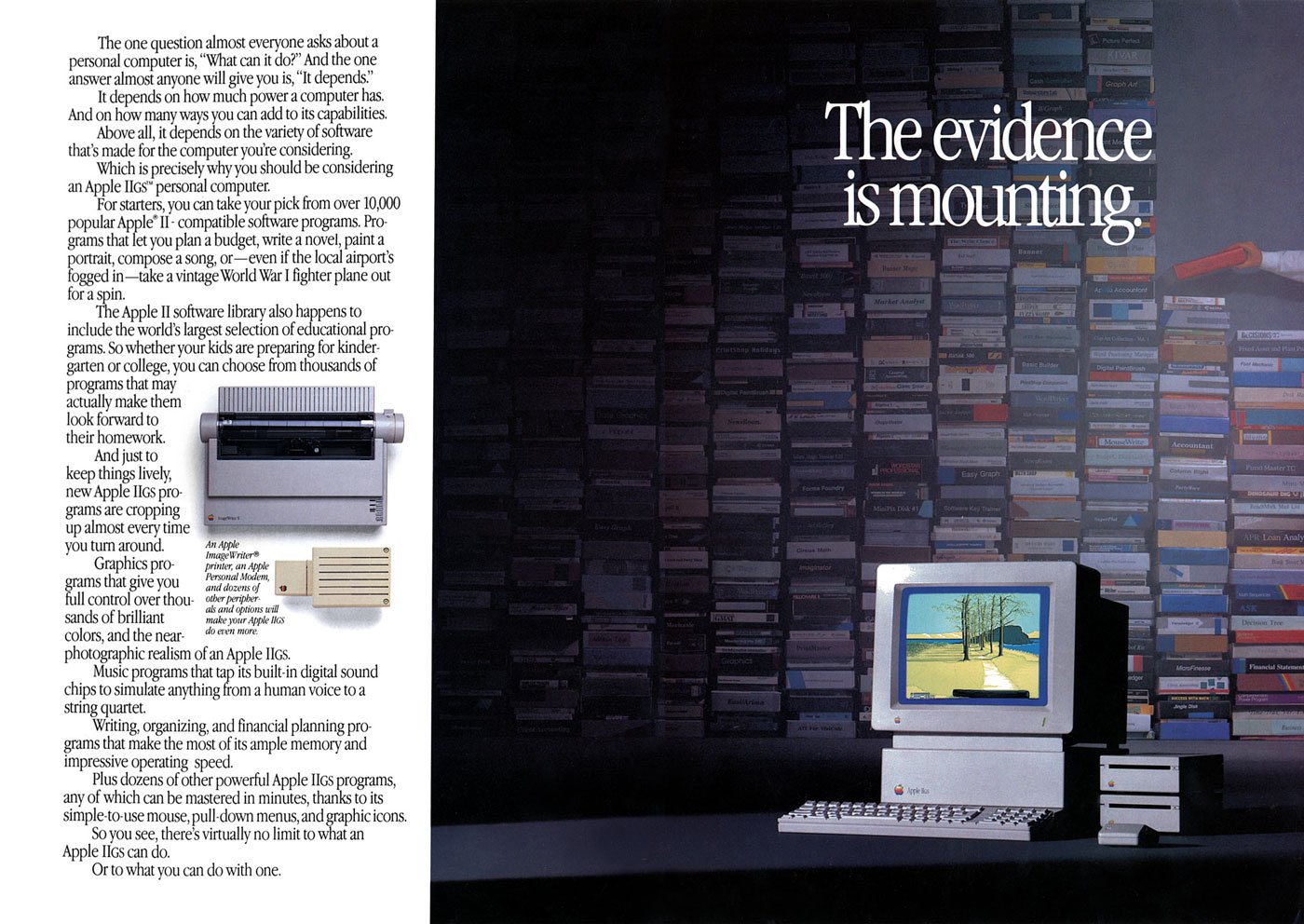

Speaking of the front section, the most interesting design decision isn’t just for looks. An array of nine 5 1/4” drive bays sets this case apart from its competition. Yeah, that’s right—nine 5 1/4s. Most PC cases of the era had several different types of drive bays: external 5 1/4” for optical drives, external 3 1/2” for floppies or other removable media, and internal 3 1/2” for hard disks. Most mid-tower cases arranged these bays in a 4-3-3 setup. To get more than four 5 1/4s you usually had to step up to an enormous full-tower case, like Lian Li’s PC-70 or the Chieftec Dragon.

Using all 5 1/4s wasn’t exactly a new idea. Just like the brushed aluminum finish, this drive bay setup was a retro throwback. The original IBM PC only had 5 1/4” bays, as did many clones. Granted, they were arranged horizontally, but you get my drift. Over the years as hard drives and floppy disks got smaller, PC manufacturers traded some 5 1/4” bays for more 3 1/2” bays. But the all-5 1/4 setup didn’t vanish; it migrated to server builds, where hot-swap drive cages fit perfectly into 5 1/4” bays. Other manufacturers would also experiment with an all-bay setup: the Cooler Master Stacker, Antec Nine Hundred, and Thermaltake Tai Chi, to name just a few.

I actually dig the aesthetics of the uniform bay approach. There’s a nice symmetry to it—take out all the bezels and brackets and the front of the case has an opening perfect for any of the lovely items in Lian Li's catalog full of accessories—or, if you really insisted, something made by another company. Included with the case were aluminum trim covers for optical and floppy drives, which blend their usually beige bezels into the box. If you needed more, they were just an online order away. Fan controllers, hard drive cages, and fan mounts were also on tap, and given enough coin you could build a clean, all-aluminum computer.

You’re free to put anything anywhere, so long as you can mount it.

But as classy as my thrift store treasure is, I've discovered a few flaws in its design. First up is the front panel I/O door. USB, FireWire, and audio connectors are hidden behind one of the cheapest feeling mechanisms I’ve used in a long time. It works… technically, but it just flaps around with clanky metal sounds. Despite being made out of aluminum like the optical drive trim plate, it doesn’t have the same smooth feel, because there are no levers or springs or anything to dampen the opening. I would have preferred exposed, flush mounted ports instead. At least put a little engineering effort into the door instead of this pitiful excuse for a hinge.

Next, the power and reset buttons are built into the stock 3-in-2 bay hard drive cage. This isn't all bad: if you’re not happy with the default location at the bottom of the case, you can move the cage to the top or middle, or anywhere else, really. But that’s the only positive thing I can say about it. Both the power and reset buttons are spongy and noisy. The spring's noise reverberates into the trim plate, and it's a high-pitched little thing, just enough to be annoying instead of a satisfying click. It’s what they’d call “bad switchgear” in the car business. It's amazing how cheap it feels, really, considering you turn a computer on and off all the time. If you bought this case back in the day, you spent a lot of money on it, and you wanted buttons that felt expensive, or at least normal. Lian Li did such a good job on everything else that this bit of chintziness stands out. There’s plenty to say about the wimpy 80mm fan, but that’s better saved for later when we talk about cooling. This case’s full-tower brother, the PC-7077, offered a 4-in-3 bay cage with a 120mm fan instead, and it should have been in this case too. You could order one from Lian Li if you really wanted one, but that shouldn’t have been necessary. The buttons and LEDs should have been built into the top or side of the bezel.

Last is the unfortunate fact that actually utilizing those 5 1/4” bays gets ugly—literally. Add a single drive or accessory that doesn’t match and now your silvery ingot is blemished with a beige bruise. Opting for the black anodized finish minimized the problem because many aftermarket accessories came in black, but what if you wanted the all-shiny experience? The Lian Li accessories catalog was right there, full of drive kits, fan grilles, and trim covers, but your wallet wasn’t going to like the prices. So if you wanted the brushed metal case, and you cared about aesthetics, you had to go all-in.

Internals and Build Considerations

Of course, aluminum cases aren’t just about aesthetics. Consider the era in which these Lian Li, Cooler Master, and other “premium” boxes debuted. It was the turn of the millennium, and the market for do-it-yourself PC building was growing rapidly. Online parts sellers made it easier than ever to buy the exact components you needed for your ultimate computing machine. LAN parties drove demand for better looking equipment to impress onlookers. Processors and graphics cards were breaking performance records, but they needed better cooling to do so. Enthusiasts who in the past might have upgraded one or two components from a prebuilt system were now building entire PCs from scratch. Consequently, these builders suffered from the numerous design flaws of contemporary case construction. Nerds everywhere cursed as they cut their fingers and scraped their knuckles working inside cases that hadn’t changed much since the eighties. After years of casual casualties, they called for an end to difficult and cramped PC cases.

An obscure Zen Buddhist scholar named Steve Jobs once said that design isn’t how something looks and feels, it’s how it works. So if you spent the extra money on one of the new wave of premium PC cases, did you actually get a product that worked, or did it just look nicer? Let's take a look inside and see if beauty is more than bezel deep. First thing to do is pull off that front bezel, which is easy thanks to a convenient cutout along the bottom edge. Many cases of the nineties required the removal of side panels and interior parts to detach their bezels, so this is already a good sign. More nice touches include large thumbscrews that secure the side panels, motherboard tray, and power supply bracket. There’s no trick latches or breakable clips on the side panels—they slide right out with no hitches or hiccups. Anyone who’s struggled with opening an intransigent side panel or shell will appreciate this smooth action.

You might be thinking “Don’t all PCs have removable side panels and bezels?” And that’s true, but you need to consider them in the context of the whole case. These boxes aren’t just supposed to look good—they’re supposed to feel good. You know what feels good after removing your side panels? Finding a slide-out motherboard tray and an external power supply bracket. Assuming you're the sort of person who removes the side panels from your computer cases, anyway. Lian Li didn’t invent any of these features, but they implemented them in a thoughtful way.

Let’s start with the power supply bracket. In most cases—pun intended—a power supply was fastened directly to the frame with some screws. Sometimes creative hand gymnastics were required—hold the supply with your right hand, turn the magnetic tipped screwdriver with your left, and hope you've screwed it in… and not screwed it up. There might be a little flange that helps hold the power supply in place, but that’s not guaranteed because I’ve repaired several machines that lacked PSU supports. I’m sure there’s lefty builders out there chuckling at this—they had an advantage for once! And that's just for installation. Removal could be even trickier, if you'd added in one of those giant Zalman flower heatsinks or some water cooling. An external bracket neatly solves these problems. After attaching the bracket to the PSU, simply slide it and the cables into the case. Removal is just as easy.

But that power supply bracket is just the opening act—the real star was the slide-out motherboard tray. Though most mid-tower cases had a sensible amount of space inside, you were still working inside a box. Sliding out a motherboard tray is like dropping the engine from a car to replace the cylinder heads—it’s easier to do complex mechanical work in an open space. Less risk of scraping your knuckles on the drive racks when installing a motherboard or CPU if you’re working outside the box. Power supplies and their wiring don’t get in the way of tightening heatsink screws. Did you drop a screw or jumper? No worries—just tilt the tray to one side and grab it. You could even open-air bench test a system if you were feeling frisky. For most users this is a one- or two-time convenience. But then, 'most users' weren't the target market here. The people who bought these cases were tinkerers, and tinkerers loved these trays. Case modders were always moving boards in and out of their project boxes. You don’t want delicate electronics inside your case when you're cutting holes in the side or constructing custom water cooling loops. True, you won't get a tsunami, but a few ounces of coolant in the wrong place can kill a machine—or a very unlucky tinkerer. You did remember to unplug everything first, right?

I can already see the tweets asking “if removable trays are so great, why have they vanished from most modern PC cases?” My gut says there’s two reasons for their disappearance. First, there’s good old fashioned bean counting. A removable tray is extra complexity of the mechanical and manufacturing varieties, and that’s not free. Like I said earlier: most users aren't tinkerers. If the tray is just a one- or two-time convenience, maybe it's more economical to spend those engineering resources elsewhere. Second, a fixed tray is better for a case’s structural integrity, especially when there’s a cutout for processor heatsink backplates. A few modern cases like the beQuiet Dark Base still have a removable tray, but instead of a slide-out design, it’s reversible. Only a few screws stand between mounting your motherboard on the left- or right-hand side of the case. You know, so you can put your PC on the left- or right-hand side of your desk and still ogle your RGB LED light show.

With the motherboard tray and power supply bracket removed, we’re left with the PC-6077’s frame, power supply shield, and the drive bay rack. These are all riveted together, since welding aluminum is more complicated than welding steel. A lack of sharp edges and some strategically placed plastic trim meant no more cut fingers and cursing fits. Hard drives and optical drives are secured by ordinary screws, not tool-less clips or rails. However, the hard drive cage does have rubber grommets to insulate the case from spinning disk vibrations. Aside from being made from aluminum, the construction is on par with other high-end cases of the time.

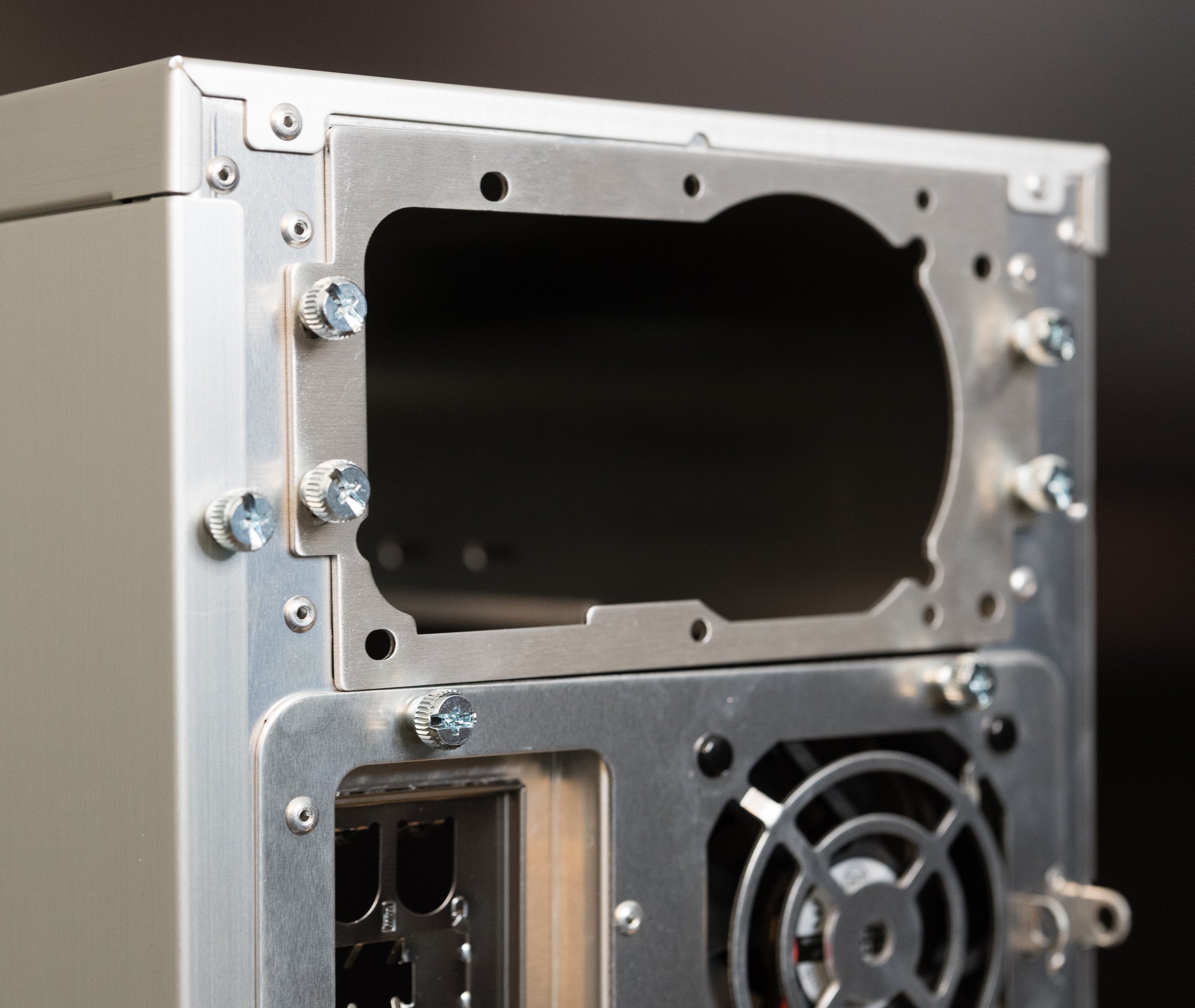

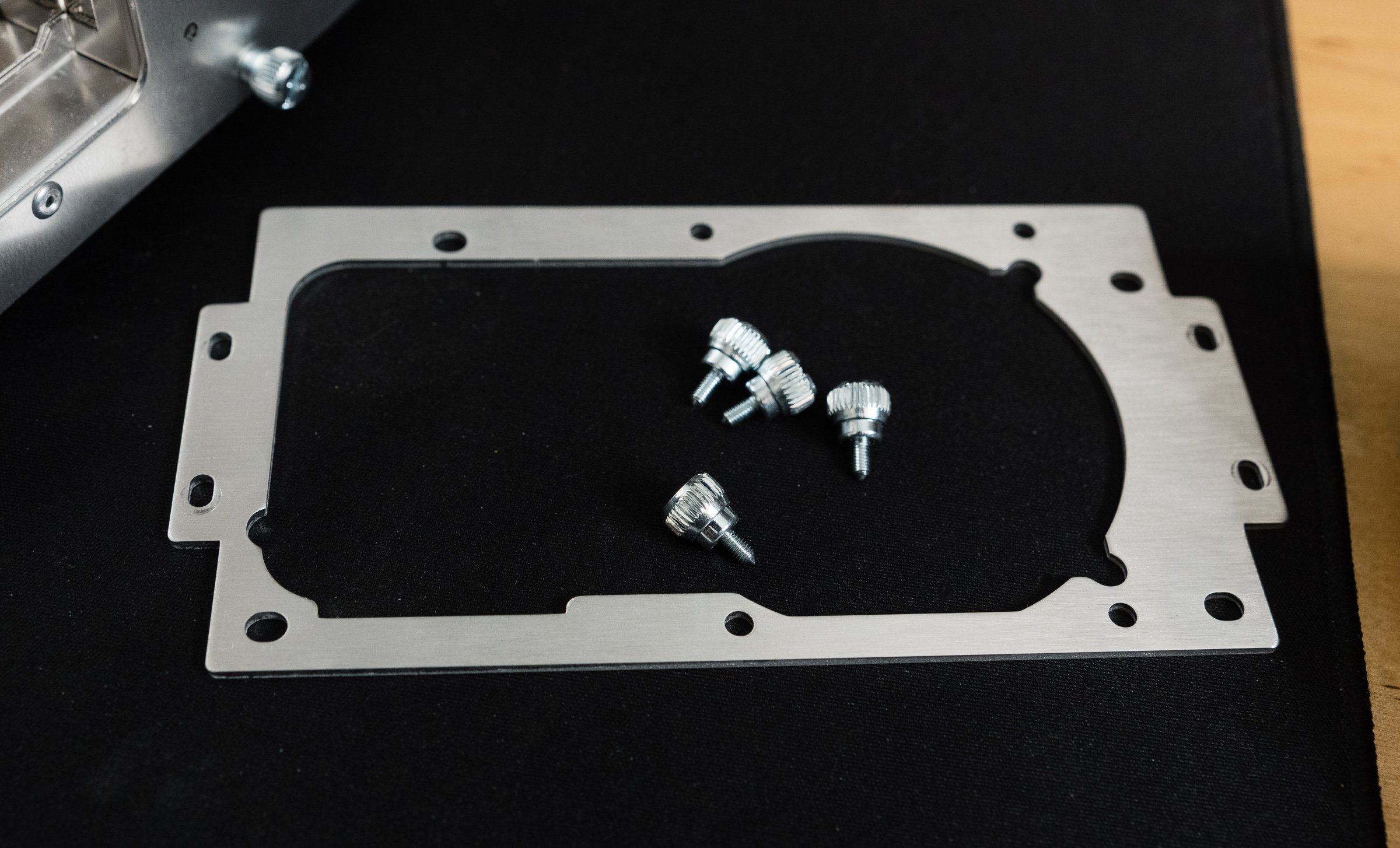

The PC-6077’s 3-in-2 hard drive cage.

The Pros and Cons of Aluminum

That aluminum structure feels solid and sturdy, but also quite light—which, granted, is the whole point of aluminum. My postal scale weighs the PC-6077 at around thirteen pounds empty. Most steel mid-towers of the era weighed around twenty pounds or more, but that weight savings comes at a price. Aluminum objects have always been more costly to manufacture than steel ones—Wikipedia will tell you more than you want to know about the Hall-Héroult process. But if you’re lugging your liquid-cooled Pentium 4 to the LAN-o-rama every Saturday, you’d happily pay the difference to cut your computer’s tonnage. Lian Li could have made the PC-6077 even lighter if they used more plastic, but the few ounces saved wouldn’t be worth losing perceived quality. That strategy was reserved for their entry level products, like the PC-10 which used a plastic front bezel.

Cost wasn’t aluminum’s only downside. On the one hand, it's ductile, which encourages modifications! On the other hand, it's ductile, which makes it vulnerable to flexing. Anyone who used a PowerBook or MacBook Pro before the unibody era knows just how bendy aluminum computers can be. Just take off the side panels and you can feel them flexing with normal handling. There are plenty of sad posts on forums from people who dented their cases with an errant foot, or dropped them and actually bent the frames. If you want more structural rigidity, you need to add more weight, which defeats the purpose of buying an aluminum case to begin with.

Aluminum’s lower density had another unintended consequence: noise. I've got some experience in voiceover, and I can tell you, mass plays an essential role in soundproofing. A lighter aluminum case absorbs less sound than a heavier steel one. A few overlapping metal pieces inside the case like the power supply frame and drive bay frame aren’t riveted together. Two of the three included fans are mounted using plastic rivets, which are better than screws but worse than isolated rubber mounts. Those rubber grommets I mentioned earlier from the hard drive bays were thin, and easily compromised. All of this adds up to a case that is susceptible to vibrations, sympathetic or otherwise.

None Like It Hot

“But my case doesn’t rattle or vibrate,” you say. Well, that’s great, but there’s another factor that impacts the acoustic qualities of a case: cooling. Whether it's case fans, heatsink fans, or radiator fans, it’s always been a challenge to build the fastest computer that’s also the quietest. What would you say if I told you that Lian Li has your best interests in mind? Why, right on the side of the box it says in big, bold letters that “Lian Li aluminum cases release heat faster than other cases!” Hey, they used a bold font—it must be true! But even if it was set in a different font, it’s still a bold claim by Lian Li. It’s easy to prove that an aluminum case is lighter—just put it on a scale. But proving that an aluminum case cools better? That’s more complicated.

They wouldn’t lie, would they?

Aluminum is a common heatsink material because it’s a good conductor with decent thermal capacity at an affordable price. Car radiators and AC evaporators are made out of aluminum. PCs were already using aluminum heatsinks on various chips, so why not make the case out of aluminum too? Posters on Usenet and web forums extolled the benefits of an aluminum case for improving cooling performance, because SpeedFan showed lower temperatures after transplanting their builds into a shiny aluminum tower. “That proves it,” they’d say, like Philip J. Fry watching blurry, grainy videos of Bigfoot.

But as smart as PC nerds like to think they are, sometimes they forget that correlation doesn’t equal causation. These claims are all marketing hype. Aluminum might be a good conductor, but you know what isn’t? Air. Heatsinks need to touch components to actually sink the heat, and there’s usually some kind of thermal compound binding them together. So if the case isn’t touching any hot components, it’s not actually cooling them. I can already hear the next counterpoint: “But wouldn’t the case material absorb heat from hot case air?” I suppose it could, but let’s think about that for a second.

Heatsinks and radiators use mass and exposed surface area to exchange heat with air, and they have a certain amount of thermal capacity before saturation. That capacity can be increased in three ways: more mass, more surface area, or more airflow. There are some cases designed to be passively cooled, like the Streacom ST-DB4, but the case itself is a giant finned heatsink directly connected to hot components. The PC-6077 doesn’t do any of that, and like a normal steel case its thermal performance is at the mercy of airflow. I don’t know about yours, but my cases obey the laws of thermodynamics.
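If you want to put rough numbers on that, here's a back-of-the-envelope sketch in Python. It assumes a steady state where all of the heat leaves with the moving air, it uses textbook approximations for air density and specific heat, and the 250 watt load and the airflow figures are made-up illustrations, not measurements of the PC-6077. Notice that the case material doesn't appear anywhere in the math.

```python
# Rough sketch: how much does the air warm up on its way through the case?
# Assumes steady state and that every watt of heat is carried out by airflow.

AIR_DENSITY = 1.2             # kg/m^3, approximate for room-temperature air
AIR_SPECIFIC_HEAT = 1005.0    # J/(kg*K), approximate
CFM_TO_M3_PER_S = 0.000471947 # cubic feet per minute -> cubic meters per second


def air_temp_rise_c(heat_watts: float, airflow_cfm: float) -> float:
    """Temperature rise (in degrees C) of air passing through the case."""
    if airflow_cfm <= 0:
        raise ValueError("airflow must be positive")
    mass_flow = AIR_DENSITY * airflow_cfm * CFM_TO_M3_PER_S  # kg/s
    return heat_watts / (mass_flow * AIR_SPECIFIC_HEAT)


if __name__ == "__main__":
    heat_load = 250.0  # hypothetical heat output of the components, in watts
    for cfm in (20, 40, 80):  # hypothetical total airflow through the case
        rise = air_temp_rise_c(heat_load, cfm)
        print(f"{cfm:>3} CFM -> {rise:.1f} C above ambient")
```

In this simple model, doubling the airflow halves the temperature rise, whether the box around it is aluminum or steel.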

Cooling wasn’t exactly priority number one for most case makers. Most PC cases of the late nineties had one rear case fan working in tandem with the power supply fan. As these fans exhausted hot air from the interior, fresh air was pulled in from vents at the front of the case. When faced with serious warmth (say, from a Pentium 4 processor and an Nvidia GeForce FX graphics card), this cooling setup couldn’t beat the heat. Consumer PCs needed more fans that could move more cubic feet of air through the case to cool more effectively.

We could shove so many fans in there!

The truth is that Lian Li's claims about releasing heat had nothing to do with the case's aluminum construction. Their cases cooled better because they included more preinstalled fans. More fans means more airflow which means more cooler, in that Tim Allen sort of way. The PC-6077, like most cases of the early aughts, had mounts for multiple intake and exhaust fans. Three 80mm fans were included in the stock configuration: an intake fan up front, an exhaust on the motherboard tray, and an exhaust at the top of the case. The power supply’s fan made four in total. This created a negative pressure system, which was sensible for most builds of the time. But PC enthusiasts back then were just like the PC enthusiasts of today—they wouldn't settle for sensible! Fortunately—for a given value of “fortunately”—the PC-6077 was pretty flexible when it came to cooling. Those beautiful, wide open drive bays were perfect for adding extra fans, and Lian Li was more than happy to sell you drive cages with fan mounts. Oh, and look—there’s one more 80mm mounting spot on the motherboard tray, just perfect for adding another fan!
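Here's a toy model of that pressure balance in Python. It assumes every fan moves the same amount of air (the 30 CFM figure is hypothetical) and ignores filters, grilles, and every other restriction, so treat it as a sketch rather than a measurement.

```python
def pressure_balance(intake_cfm, exhaust_cfm):
    """Classify a fan layout as positive, negative, or neutral pressure."""
    net = sum(intake_cfm) - sum(exhaust_cfm)
    if net > 0:
        return "positive"
    if net < 0:
        return "negative"
    return "neutral"


FAN_CFM = 30.0  # hypothetical airflow per fan, assumed identical for all fans

# Stock PC-6077 layout: one front intake, plus three exhausts
# (motherboard tray fan, top fan, and the power supply's fan).
stock_intake = [FAN_CFM]
stock_exhaust = [FAN_CFM, FAN_CFM, FAN_CFM]

print(pressure_balance(stock_intake, stock_exhaust))  # prints "negative"
```

With more air being pulled out than pushed in, the case makes up the difference by drawing air through every gap and vent it can find.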

What about the competition? Cooler Master’s aluminum cases had similar fan mounting options. Chenming’s model 601 (which you might know better as the Chieftec Dragon, the Antec SX1030, the Thermaltake Xaser, or the case used by Alienware) had multiple front and rear fan mounts along with side panel fan mounts. So that means they all have fantastic cooling, right? Think again. Some cases with lots of fan mounts only had one, maybe two fans installed, and they might not have been installed in optimum positions. A critical examination of these enthusiast cases, including Lian Li’s, shows that most manufacturers just shoved fans in their cases with no real consideration for fluid dynamics.

Talk about a choked-off intake.

Look at the intakes: they’re choked by layers of metal gratings, foam filters, and narrow bezel vents. That’s not all: the intake fans are sandwiched on the other side by hard drive cages! Whatever air is lucky enough to make it past the drives has to contend with a jungle of ribbon cables and power wires. At least exhaust fans were positioned near the CPU, and some OEMs were smart enough to install dual 80mm or a single 120mm fan to really suck out the air. But let’s say for the sake of argument that there were no blockages or cables or restrictions. The exhaust fans aren’t in line with the intake fans, which means there isn’t a straight path for air to move through the case. The result is a case riddled with turbulence and dead zones, where fans have to work harder, and therefore louder, to cool your computer.

When it came to acoustics, fans back then were kinda… meh. Pulse-width modulated variable fan speed was still years away. Four 80mm fans spinning at a constant two to three thousand RPM meant these suckers were loud. Good thing there’s plenty of bays in the PC-6077, because you’ll need a fan controller to dial things back when you don’t need maximum power. But be careful, because even ball-bearing fans could make mechanical noise at certain speeds. Multiply the mechanical noises by reverberations in the case, and you’ve got a computer cacophony. Before you know it you’re reading SilentPCReview.com and testing all the various isolation mounts to see which combination works best.
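For a sense of how fan noise stacks up, here's a small Python sketch using the standard rule for adding independent (incoherent) sound sources. The 30 dB per-fan figure is a hypothetical placeholder, and the math ignores case resonance and the mechanical rattles mentioned above, which only make things worse.

```python
import math


def combined_level_db(levels_db):
    """Combine independent noise sources given as decibel levels."""
    if not levels_db:
        raise ValueError("need at least one source")
    # Convert each level to relative power, sum, and convert back to dB.
    total_power = sum(10 ** (level / 10) for level in levels_db)
    return 10 * math.log10(total_power)


FAN_DB = 30.0  # hypothetical noise level of a single fan

for count in (1, 2, 4):
    level = combined_level_db([FAN_DB] * count)
    print(f"{count} fan(s): {level:.1f} dB")
```

Under these assumptions, four identical fans come out roughly 6 dB louder than one, which is why dialing them back with a controller pays off.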

Thermals are even more important today than they were twenty years ago, and PC case makers have largely caught on to what works and what doesn’t. There’s still duds out there, but it’s pretty easy to filter them out thanks to the YouTube Tech Personality Industrial Complex. The same market pressure that forged the aluminum cases of the early aughts is still pushing manufacturers to make quieter, cooler chassis…es…es with better functionality today.

This Old Tower, Today

So what’s left to do with this like-new PC-6077? The obvious idea is to fill it with vintage parts and make it a Windows XP gaming beast. Yes, an Athlon 64 X2 with a GeForce 6800 Ultra would be right at home, serving up some Battlefield 2 with a side of SimCity 4. Install a Fanbus, a SoundBlaster Audigy control panel, dual CD/DVD burners, and a removable hard drive carrier and you’ve got the classiest gamer box on the block… assuming you still live in 2005.

But what if you wanted to stuff a modern computer inside? Some would cry sacrilege, but I know people who’ve used and re-used their Lian Li cases for over a decade. I don’t think it’s that crazy of an idea, especially for a platform like the PC-6077. Lian Li’s appeal to the 5 1/4 lovers makes it remarkably easy to convert this case into an airflow-focused silver sleeper. Yanking out all of the trim covers and blanking plates gives you plenty of room to do whatever you want. Fit some 120mm fan adapters and replace the stock 80mm fans with Noctuas and you have airflow competitive with most modern cases. If you feel up to the task, there’s enough room to 3D print or fabricate a dual 140mm fan bracket. Fit a mesh front covering into the bezel and you’d make something that could blend right in with modern airflow oriented cases.

You’ll run into other issues, of course. Closed-loop liquid coolers aren’t an option without fabricating a bracket to mount them into the drive bays. You could take a page from the LAN partiers of yore and build a custom open-loop liquid cooling system. Many medium to large sized air coolers will fit within the PC-6077’s confines, like Cooler Master Hypers, Noctua NH-U12s and beQuiet Dark Rocks. But the truly massive air coolers, like the Noctua NH-D15, won’t stand a chance. Modular power supplies mitigate the cable management problems somewhat, since you can just omit the cables you don’t need. Still, cleanly routing the PCI Express power, 24 pin ATX, and the EPS 12 volt cables will take some… no, all of your cunning. Stick to NVMe solid state drives and you won’t have to worry about any SATA power or data cables. If you plan your build carefully, you could conceal a killer modern system in this twenty year old shell and have a PC that looks like nobody else’s.

The G5’s thermal design was a benchmark for other systems.

Yet the only fully aluminum cases on Lian Li’s website these days are a few small form factor boxes; fully aluminum tower cases are nowhere to be found. So why did Lian Li stop making cases like this? There’s two factors for the decline of the fully aluminum mid-tower case. First, other companies used steel to build better designs, with more features, for half as much. Meanwhile, Lian Li spent too much time imitating the Power Mac G5, and not enough time innovating. Yes, there was a demand from PC users for cases that looked like the G5 or Mac Pro, because nothing looked like G5 cases. Apple had learned their lesson about hot components and bad acoustics with the Mirrored Drive Doors Power Mac G4, and had gone back to the drawing board to solve their problems with a clean sheet design. Thus the Power Mac G5 got a brand new case design with straight-through airflow and dedicated thermal zones, which made for a quiet, high performance computer. Lian Li’s PC-V1000 might have looked like a G5, but just because something has a cheese grater front panel doesn't mean it works like a G5. The V-series sold well, but Lian Li mortgaged their future by copying Apple.

The second factor that spelled doom—no pun intended—for aluminum cases was the decline of the LAN party. Home internet got fast enough that most people had a good enough time blasting their buddies without departing their desks. If you’re not moving your computer around all the time, you don’t care as much about saving weight. The extra money spent on an aluminum chassis could be spent elsewhere, like on more fans, liquid cooling, or RGB LED lights. After all, who cares about subtlety when you can put on a light show that rivals a Pink Floyd concert? The remaining buyers who valued weight savings could buy even smaller and lighter aluminum Mini-ITX small form factor cases. Mini-ITX has its own compromises, but the finished product saves a lot of space. If you have to move your computer around a lot, why not just make it as small as possible?

To its credit, Lian Li diversified long before the collapse of the market by creating the Lancool series of steel cases in 2009. Lancool catered to cost-conscious buyers while Lian Li continued to sell aluminum boxes to their traditional enthusiast clientele. Even as other manufacturers abandoned the aluminum case market, Lian Li doggedly stuck to it. Unfortunately, Lian Li abandoned their fully aluminum product line in the mid-2010s. Current Lian Li cases like the O11 Dynamic are steel frames with aluminum accents or panels. They still make a few aluminum small form factor cases (check out their collaboration with Dan Cases for some neat mini-ITX designs), but those are now rare exceptions. Most builders who valued the classy looks and functional design of Lian Li migrated to companies like Fractal Design, whose Define, Meshify, and Torrent series of cases are beloved for both gaming PCs and workstations.

Still, it’s remarkable that this old case can competitively cool a modern system with only a few minor upgrades. Someone could have bought a PC-6077 in 2003 and used it for their primary build for twenty years, which isn’t something you can say about most of its contemporaries. It seems like a happy accident that the all-bay design actually made it future-proof despite the obsolescence of 5 1/4” drives. During my research I found all sorts of forum and Reddit posts looking for cases just like this. Storage box builders are settling for used cases to fill with hot swap hard disk cages because the modern case market is leaving them high and dry. Server cases—then and now—are just too expensive and there’s no new mid-towers with lots of 5 1/4” drive bays. That’s why prices are still fairly high on eBay, and why I was shocked to find one at a thrift store. Sometimes fortune smiles upon thee, and this case will serve an honorable role as a vintage powerhouse. That is, once I decide what to put inside it.