Let Macs Control Apple TVs

If you have an Apple TV and an iPhone, you might be familiar with the Apple TV Remote app. It used to be a standalone application until Apple moved its functionality to Control Center in iOS 12. After pairing with the Apple TV, all the functions of your remote control are now available on your iPhone. If you like swiping around to navigate an interface, I suppose you’d like the bigger trackpad surface. It’s also great to have another way to control an Apple TV without shelling out for another remote, just in case that slippery thing goes missing. Or if you just don’t like the “which way is up” Siri remote, that’s fair too.

Remote control is also available on the iPad, and there’s a cut-down version on the Apple Watch too. Even HomePods can control Apple TVs via Siri. But for some reason, Macs can’t remotely control an Apple TV. Macs can’t fast forward, adjust the volume, or queue up the next episode of JoJo’s Bizarre Adventure. Apple has yet to publish a standalone app, menu extra, or Control Center doohickey that’ll put your Mac in control of your TV. I imagine a Mac wouldn’t be somebody’s primary remote control, but having the ability to send commands from a Mac could be useful in other ways. Imagine Shortcuts remotely controlling your Apple TV.

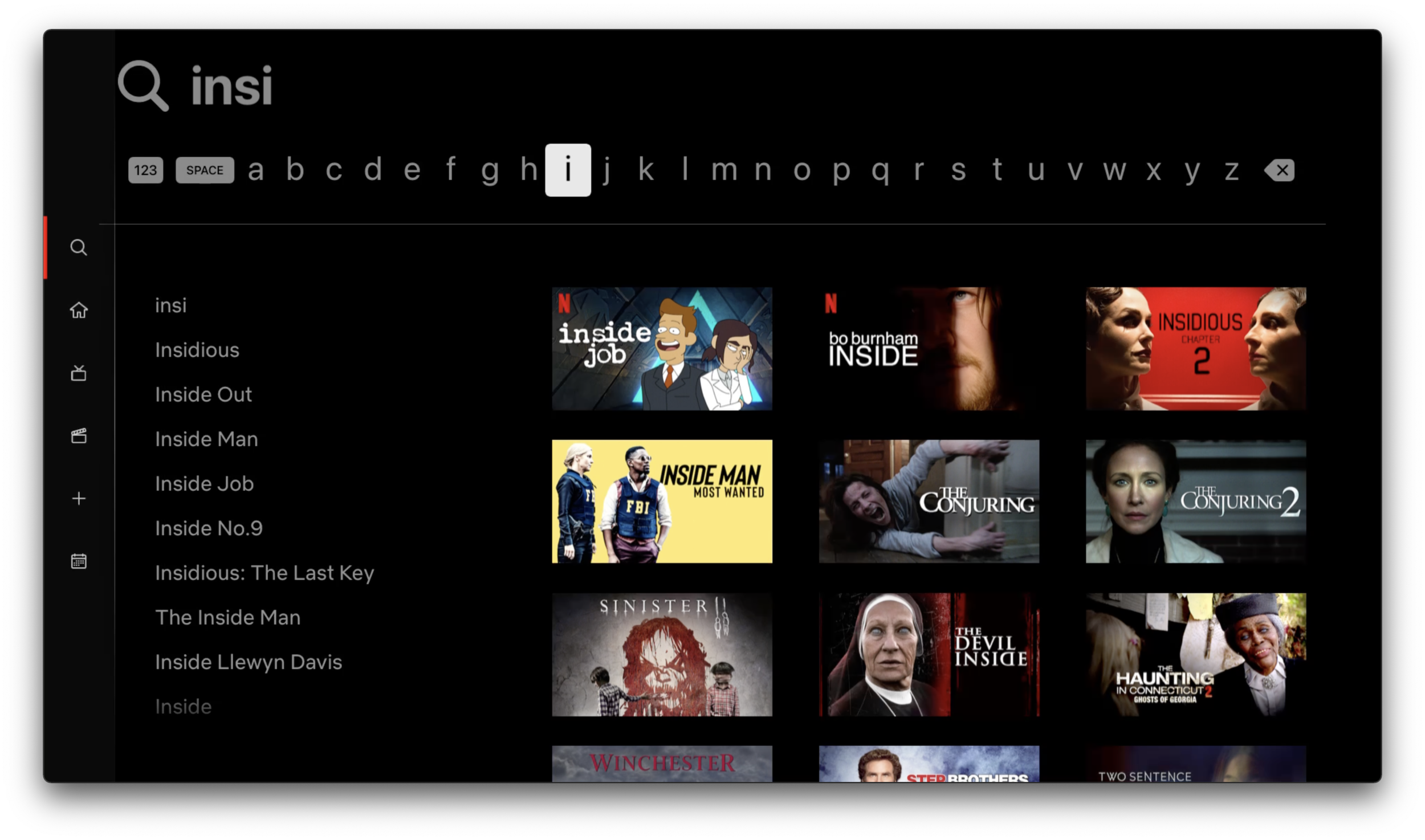

But that’s not why I want the Remote functionality on my Mac. There’s one feature that puts the iOS remote above a Siri or infrared remote: text entry. If you’ve had the displeasure of entering any kind of text on a TV with a remote control, you’ll know why this feature is helpful. Whether they come in a grid or a line, on-screen keyboards are the most infuriating part of streaming devices and smart TVs. Apple TV’s system keyboard was a grid until the launch of the Siri remote, which introduced the line-based layout. You can still use the grid-based one if you use an infrared remote, but some apps force line-based input regardless. Netflix, I’m looking at you.

This horizontal nightmare is inflicted not just on Apple TV users, but on Chromecast and Roku owners too.

There’s an escape hatch to this line-and-grid prison if you’ve paired your iPhone or iPad to your Apple TV as a remote. When a text field pops up on screen, you’ll get a notification on your iOS device to enter some text. This text field behaves like any other, and you can type anything into it. Thumb-typing on a phone is far quicker than pressing multiple buttons or swiping around using normal remote controls. It took fifteen seconds for me to type five letters using the awful horizontal arrangement. Unlocking my iPhone and using its keyboard cuts that time to two seconds. If you’re already in the Remote app, it’s even faster than that.

This is incredibly useful, and not just for finding the right film for your Friday night Netflix fix—this text field can summon password managers! If you’re like me and have uniquely generated random passwords for every single login—complete with numbers, special characters, and capital letters—entering them with an on-screen keyboard is torture. So it’s super handy to autofill or paste a password from Bitwarden instead of hunting and pecking with an on-screen keyboard! This feature’s been around for three years now on iOS devices, but it’s nowhere in sight for a Mac. People watch TV with their laptops, they AirPlay from laptops to TVs, and there could be TVs in rooms with desktop Macs. Given that Macs can now AirPlay to other Macs in Monterey, the absence of an Apple TV remote on the Mac is glaring.

The macOS Now Playing menu extra.

So how would you add this functionality to a Mac? Sure, a standalone application could do the job, but the Mac has many places to put the controls. Let’s start with the Now Playing menu extra. Introduced in Big Sur, Now Playing is a quick shortcut to control media apps. Why not Apple TVs? Pull down the menu and you could play, pause, or fast forward whatever’s currently playing on any of the Apple TVs on your network. Easy peasy.

But Now Playing is fairly limited in terms of space, and shoving a full remote in there would be overkill. Along with Now Playing, a standalone Remote app could mimic all the functions of the iOS Remote app. Just bring it all over. Want to move through menus with your Mac’s trackpad like the Siri remote? Swipe away! Hate swiping? Use arrow keys or click on the buttons with a mouse! As for text entry, the app could show text prompts just like on iOS, but don’t forget about Notification Center. When a text prompt comes up on the Mac, it should be an interactive one that you can type into, just like Messages’ alerts. The next time a password or text prompt shows up, I won’t have to reach for my iPhone again! The lives of multi-screeners who use a TV and laptop at the same time will never be the same again!

Okay, okay—I admit, that’s a bit much. I know this feature won’t change the world, but the whole ethos that Apple is pushing these days is “ecosystem.” Macs should be part of the Apple TV remote ecosystem, just like they’re part of the AirPlay ecosystem. AirPlaying from my laptop to my Apple TV is one of the best ways to work through my daily YouTube queue, and I can pause, rewind, and fast forward using controls on my Mac. That’s been there since day one of AirPlay. Let’s get remote control and text entry on the same level.

Now, I know there are some workarounds I could use right now. I do have a Bluetooth keyboard paired with my Apple TV. I think it’s buried in the drawer of game controllers and miscellaneous games in the entertainment center. But that keyboard can’t autofill passwords, and the goal is to avoid having to use a separate input device. Still, if you want to use one, it’s a valid option. Game controllers can control an Apple TV too, but they’re not that great at text input. Just ask Microsoft, who made an add-on keyboard for Xbox controllers.

“Just pick up your phone!” you say. Well, my phone might be in another room. My Mac might be more convenient. Plus, my Mac has a real keyboard, and it’s easier to copy and paste passwords with a real pointer and keyboard.

“Use CiderTV or Ezzi Keyboard!” Yes, those do exist. But this should be an operating-system-level feature. These apps also don’t have all the functionality of the Remote app, since they’re just emulating a Bluetooth keyboard. Still, they are useful, and their developer is filling a niche that Apple seems to be overlooking.

I’ve also been told that Roomie Remote fully supports controlling Apple TVs, including text input, but $60/year is pretty steep for that functionality alone. It looks like a very powerful utility, and in that context the $60 is likely justified. But for just reproducing the Apple TV Remote app on a Mac, it’s overkill.

So, to Apple, I issue this challenge: let my Mac control an Apple TV. You’ll make a minor inconvenience disappear, and for that I would commend you.